eScience Lectures Notes : ManyPage

From QuicktimeVR to MPEG4

Introduction: From QuicktimeVR to MPEG4


From QuicktimeVR to MPEG4: Some tools and formats

Cheap Networked VR :

Without or with low level of sharing

With low level of immersion

From simple tools to basic bricks to build complex NVR

Hugh Fisher: "without any graphics, you may experience the feeling of community" (Multi-User Dungeon)

What we may begin with: "without complex sharing, we may experience a feeling of exploring a virtual world"

File Format, Graphic Library/programming Language, Development Toolkit ...

3D File Format, Graphics Library/Programming Language

Rendering and Graphics

These standards are mainly concerned with graphics and rendering of 3D scene graphs.

They have very little or no consideration about communication between users and other networking related issues.

QuicktimeVR

The graphics libraries from SGI

DirectX

VRML : Virtual Reality Modeling Language

VRML like...

Java 3D, JOGL

Apple QuicktimeVR (a.k.a. QTVR)

Realistic Immersion

Cheap

360ish degrees

QuicktimeVR is a technology that lets users look around from inside a cylinder or a sphere of stitched 2D pictures, giving the feeling of being inside a place.
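
As a rough illustration of "looking around inside a cylinder", the sketch below maps a viewing direction onto the pixel columns of a 360-degree cylindrical panorama. It is only a sketch under stated assumptions: the function name `visible_columns` and its parameters are hypothetical and are not part of QuicktimeVR.

```python
def visible_columns(pano_width, yaw_deg, fov_deg):
    """Return the (start, end) pixel columns of a 360-degree cylindrical
    panorama that fall inside the viewer's horizontal field of view.

    Columns wrap around the cylinder, so start may be greater than end.
    All names here are illustrative assumptions, not a QuicktimeVR API.
    """
    pixels_per_degree = pano_width / 360.0
    left = (yaw_deg - fov_deg / 2.0) % 360.0    # left edge of the view
    right = (yaw_deg + fov_deg / 2.0) % 360.0   # right edge of the view
    start = int(left * pixels_per_degree) % pano_width
    end = int(right * pixels_per_degree) % pano_width
    return start, end


# Looking straight ahead (yaw 0) with a 60-degree view wraps around
# the seam of the stitched picture.
window = visible_columns(3600, 0, 60)
```

A real viewer would also correct the cylindrical distortion when drawing the selected columns; that step is left out here.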

Creation


QTVR examples

Terrace, Pool, Reception, Bar, Restaurant and magnificent views of Dunk Island and the Coral Sea.

At 2228 meters above sea level Mount Kosciuszko is Australia's highest peak.

Imagina 2000

Online : http://imagina.ina.fr/Imagina/2000/3D/QuickTimeVR/scene_1_vers_2.fr.html

Local : /INA/Imagina/2000/3D/QuickTimeVR/scene_1_vers_2.fr.html

The graphics libraries from SGI

- OpenGL is a graphics library introduced by Silicon Graphics in 1992
- Allows developers to write a single piece of code which is supposed to run on various platforms (as long as they have an OpenGL library implementation)
- Broadly used
- Hardware acceleration
- Not object-oriented
- Rendering and graphics
- OpenGL Architecture Review Board, http://www.opengl.org

- OpenGL
- Open Inventor: the OO format of SGI; introduction of a scene graph
- OpenGL Performer: provides a powerful and extensible programming interface (with ANSI C and C++ bindings) for creating real-time visual simulation and other interactive graphics applications
- OpenGL Multipipe

OpenGL is a graphics library introduced by Silicon Graphics in 1992 to allow developers to write a single piece of code, based on the OpenGL API, which is supposed to run on various platforms (as long as they have an OpenGL library implementation).

Since its inception OpenGL has been controlled by an Architecture Review Board whose representatives are from the following companies: 3DLabs, Compaq, Evans & Sutherland (Accelgraphics), Hewlett-Packard, IBM, Intel, Intergraph, NVIDIA, Microsoft, and Silicon Graphics.

Most Computer Graphics research (and implementation) uses OpenGL, which has become a de facto standard. Virtual Reality is no exception to this rule.

OpenGL Architecture Review Board, http://www.opengl.org

There are many options available for hardware acceleration of OpenGL-based applications. The idea is that some complex operations may be performed by specific hardware (an OpenGL accelerated video card such as those based on 3Dlabs’ Permedia series or Mitsubishi’s 3Dpro chipset, for instance) instead of the CPU, which is not optimized for such operations. Such acceleration allows low-end workstations to perform quite well at low cost.

We will see that OpenGL is important enough that VRML browsers and Java3D are typically built on top of it: if a given workstation has hardware support for OpenGL, the VRML browser and Java3D will benefit from it as well.


DirectX

Direct3D

Windows specific

Some original things, patented by Microsoft

(Vector mapping ?)

Microsoft DirectX® is a group of technologies designed by Microsoft to allow Windows-based computers to run and display applications rich in multimedia elements such as full-color graphics, video, 3-D animation, and surround sound. DirectX is an integral part of Windows 98 and Windows 2000, as well as Microsoft® Internet Explorer 4.0. DirectX components may also be installed in Windows 95 as an optional package.

DirectX allows a compliant application to run on any Windows-based system, independent of the particularities of each system's hardware. In this sense it is similar to OpenGL; however, its availability is limited because it is a Windows-specific component.

DirectX accomplishes its task via a multilayered structure. The Foundation layer is responsible for resolving any hardware-dependent issue. DirectX also allows developers to deploy creation and playback of multimedia content via DirectX’s Media layer. A third layer, Component, completes the high-level protocol layer stack.

Some VRML browsers also provide a Direct3D-based version (as well as the common OpenGL one). A good example is blaxxun’s Contact 4.04

VRML : Virtual Reality Modeling Language

- 1994: from 2D to 3D on the Net
- 1997: VRML 2.0 becomes an ISO standard (ISO/IEC 14772-1:1997)
- Based on Open Inventor and on the concept of a scene graph
- Viewed with a VRML browser (the source of its limitations?)
- Interaction and behaviour, with ROUTE
- Includes the network, through URLs
- A file format, with an External Authoring Interface (EAI) and Script nodes
- Main quality and main imperfection: it is a text file!

VRML : Examples

- A very simple example: a tree
- Monaco: /lecture/ivr/Web3D/vrmlImagina/
- Some demos from Cortona: http://www.parallelgraphics.com/products/cortonamacosx
- Nadeau's slides: "Introduction to VRML97"

VRML 2.0, which is the latest version of the well-known VRML format, is an ISO standard (ISO/IEC 14772-1:1997). Having a huge installed base, VRML 2.0 has been designed to support easy authorability, extensibility, and capability of implementation on a wide range of systems. It defines rules and semantics for presentation of a 3D scene.

Using any VRML 2.0 compliant browser, a user can simply use a mouse to navigate through a virtual world displayed on the screen. In addition, VRML provides nodes for interaction and behavior. These nodes, such as TouchSensor and TimeSensor, can be used to intercept certain user interactions or other events which then can be ROUTed to corresponding objects to perform certain operations.

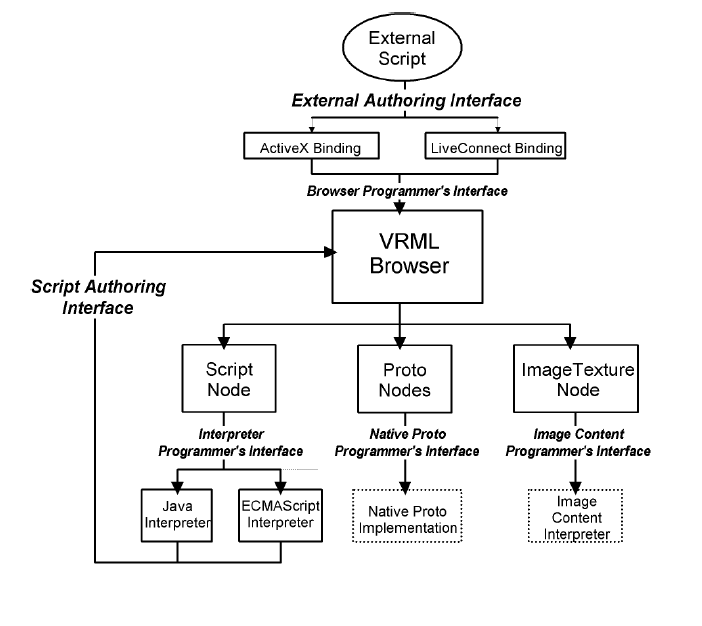

Moreover, more complex actions can take place using Script nodes, which are used to write programs that run inside the VRML world. In addition to the Script node, VRML 2.0 specifies an External Authoring Interface (EAI) which can be used by external applications to monitor and control the VRML environment. These advanced features enable a developer to create an interactive 3D environment and bring the VRML world to life.
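
The idea of routing an event from a sensor to another object can be sketched in a few lines of Python. This is not VRML and not a browser API: the `Node` class, its methods and the node names are assumptions made up for illustration; only the notion of ROUTE and the `isActive` output of a touch sensor come from VRML.

```python
class Node:
    """A toy node with named fields; an illustrative stand-in, not a VRML node."""

    def __init__(self, name, **fields):
        self.name = name
        self.fields = dict(fields)
        self.routes = {}  # eventOut name -> list of (target node, eventIn name)

    def route(self, event_out, target, event_in):
        """Similar in spirit to: ROUTE self.event_out TO target.event_in"""
        self.routes.setdefault(event_out, []).append((target, event_in))

    def emit(self, event_out, value):
        """Send an event to every destination routed from this output."""
        for target, event_in in self.routes.get(event_out, []):
            target.receive(event_in, value)

    def receive(self, event_in, value):
        """Default behaviour: store the incoming value in the named field."""
        self.fields[event_in] = value


sensor = Node("Touch")             # stands in for a TouchSensor
light = Node("Lamp", on=False)     # stands in for a light node
sensor.route("isActive", light, "on")
sensor.emit("isActive", True)      # the user presses the mouse button
```

After the last line the lamp's `on` field is true, without the sensor knowing anything about lamps; a Script node would sit between the two when the value needs to be transformed on the way.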

VRML like...

After the fall of interest in VRML...

- Flash3D
- Cult3D (still alive)
- Demicron (Java based)
- 3D AnyWhere
- Blaxxun3D
- MetaStream / MetaCreation / ViewPoint: scalable open file format
- Caligari ...
- And again QTVR, and Quicktime
- Axel from Mindavenue: http://www.mindavenue.com/
- See http://www.cs.unc.edu/~isenburg/research/3DforEB/

VRML directions ... ?

- Adobe Atmosphere
  - 03/12/01: Build 67 is now available! (No news since last December!)
  - "Adobe enters VR market": http://www.vrnews.com/issuearchive/vrn1003/vrn1003front.html
- Virtools: http://www.virtools.com/applications/index_mac_demos.asp
- X3D, from the Web3D Consortium
  - July 23, 2002: Web3D Consortium releases X3D Final Working Draft
  - Call for implementations in advance of International Standard submission
  - X3D SDK available from Web3D Consortium at SIGGRAPH 2002
  - Web3D Consortium establishes Java Rendering Working Group to define graphics API bindings for Java

-

Java 3D

- Part of the Java Media APIs
- High-level constructs for creating, on the fly, and manipulating 3D geometry in a platform-independent way
- Controlling the virtual world is very easy
- VRML is aimed at presentational applications, whereas Java3D is a Java language API
- Performance and interactivity
- A VRML browser in Java 3D?
- Future of Java3D?

Java 3D is part of the Java Media APIs developed by Javasoft. Providing developers with high-level constructs for creating and manipulating 3D geometry in a platform-independent way, the Java 3D API is a set of classes for writing three-dimensional graphics applications and 3D applets.

Since a Java3D program runs at the same level as any other Java program/applet in the virtual machine, controlling the virtual world becomes very easy through calling the Java3D API from any Java program. Although Java3D and VRML both appear to target the same application area, they have fundamental differences. VRML is aimed at a presentational application area and includes some support for runtime programming operations through its External Authoring Interface and the Script node, as mentioned earlier. Java 3D, however, is specifically a Java language API, and is only a runtime API. Java 3D does not define a file format of its own and is designed to provide support for applications that require higher levels of performance and interactivity, such as real-time games and sophisticated mechanical CAD applications. In this sense, Java 3D provides a lower-level, underlying platform API.

Many VRML implementations can be layered on top of Java 3D. In fact, it is possible to write a VRML browser using Java 3D, such as the browser developed by VRML consortium’s Java3D and VRML Working group.

Future of Java3D : JOGL

https://jogl-demos.dev.java.net/

- Part of the Java Media APIs? No: BSD license
- High-level constructs for creating, on the fly, and manipulating 3D geometry in a platform-independent way? No: low-level OpenGL bindings
- Performance and interactivity? Yes: targeted at game development
- A VRML browser in Java 3D? No: an X3D browser in JOGL
- JOGL provides full access to the APIs in the OpenGL 1.4 specification as well as nearly all vendor extensions, and integrates with the AWT and Swing widget sets.
- Co-existence of Java 2D, Java3D, JOGL ...
- Another possibility: Xith3d, a high-performance scene graph and renderer for gaming: https://xith3d.dev.java.net/
- Trend: towards low-level programming (hardware: programmable pipeline, vertex shader, fragment shader), using Cg, NVIDIA's shading language (C-like, hardware independent)

Chris Campbell (July 28, 2003 9:46PM PT)

URL: http://weblogs.java.net/pub/wlg/278


[I was going to reply to Chris's excellent weblog in the talkback section, but I started rambling and it touched on some other thoughts I've had, so I decided to ramble here instead... Keep in mind that I'm only half-wearing my Sun cap right now (kind of like one of those green and brown, half A's, half Giants caps that were popular in the '89 Series), so I'm not speaking entirely on behalf of the company.]

In response to Chris Adamson's recent blog entry, The End (of Java3D) and the Beginning (of JOGL):

I'd just like to point out that JOGL is not an all-out replacement for Java3D. The two can co-exist, and one could potentially rewrite the platform-specific layer of J3D to sit atop JOGL. Java3D does indeed act as an "isolation layer" for the underlying platform when a developer uses its "immediate mode" APIs, but more importantly Java3D offers a high-level scene graph API. Many educational and corporate institutions have chosen Java3D because of its scene graph offerings, in addition to the appeal of its cross-platform nature.

On the other side of the coin you have the traditional game shops, who want to get as close to the graphics platforms/hardware as possible. Many of these folks are finding JOGL a better fit because it's a lower-level API, and they can make use of their existing OpenGL knowledge/code base. So I think it depends on the type of application you're developing which API best suits your needs. The gaming community has been clamoring for official Java bindings for OpenGL for quite some time, so that's where Sun's efforts seem to be heading, but don't count Java3D out for good; it still serves its purpose quite well as a higher level 3D graphics library.

Related to this discussion, we're also starting to see some folks on the javagaming.org forums asking whether JOGL would be a better fit than Java 2D for their apps/games. Again, JOGL is not the end-all and be-all Java graphics library. Many people don't realize that OpenGL is actually an expressive 2D library, despite its tight association with the 3D world. However, there's so much more to 2D graphics than rendering lines and sprites really fast (think medical imaging, complete support for any image format or color/sample model, printing, text rendering, stable offscreen rendering, etc). This is where Java 2D really blows the proverbial socks off all the other 2D libraries out there.

My answer to those folks on javagaming.org is the same as my J3D response: Java 2D is a higher-level, easier-to-use, more robust, more full-featured 2D rendering API than JOGL. Like J3D, the two technologies can play well together (if we do our job correctly, there should be no reason why the two API's couldn't be used in the same application). Also like J3D, we use hardware-accelerated graphics libraries (such as Direct3D and OpenGL) under the hood, so for many applications, performance should be virtually the same whether you use Java 2D or JOGL. As I mentioned earlier for J3D, we could also port our OpenGL-based Java 2D pipeline to sit atop JOGL (in fact, we're exploring this idea for a future release, which should further decrease our dependence on native C code). If you want to access the very latest in hardware technology, such as programmable shaders, or if you have large data sets of vertices and you're comfortable with the increased complexity of OpenGL, then JOGL would certainly be a better fit.

So the choice is yours... Each API has its benefits; it's up to you to evaluate which one is best-suited for your next project!

Chris Campbell is an engineer on the Java 2D Team at Sun Microsystems, working on OpenGL hardware acceleration and imaging related issues.

VPython (Ex Visual Python)

3D Programming for Ordinary Mortals

VPython includes:

- The Python programming language
- An enhanced version of the Idle interactive development environment
- "Visual", a Python module that offers real-time 3D output, and is easily usable by novice programmers

VPython is free and open-source.

VPython has been ported to the Wedge by an eScience student, Shaun Press, with the help of Hugh Fisher.

Here is a complete VPython program that produces a 3D animation of a red ball bouncing on a blue floor. Note that in the "while" loop there are no graphics commands, just computations to update the position of the ball and check whether it hits the floor. The 3D animation is a side effect of these computations.

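    from visual import *

    # Scene objects: a blue floor and a red ball above it
    floor = box(pos=(0,0,0), length=4, height=0.5, width=4, color=color.blue)
    ball = sphere(pos=(0,4,0), radius=1, color=color.red)
    ball.velocity = vector(0,-1,0)
    dt = 0.01

    while 1:
        rate(100)  # at most 100 iterations per second
        ball.pos = ball.pos + ball.velocity*dt
        if ball.y < ball.radius:
            # the ball touches the floor: reverse the vertical velocity
            ball.velocity.y = -ball.velocity.y
        else:
            # otherwise gravity changes the vertical velocity
            ball.velocity.y = ball.velocity.y - 9.8*dt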

The program starts by importing the module "visual" which enables 3D graphics.

A box and a sphere are created and given names "floor" and "ball" in order to be able to refer to these objects.

The ball is given a vector velocity.

In the while loop, "rate(100)" has the effect "do no more than 100 iterations per second, no matter how fast the computer."

The ball's velocity is used to update its position, in a single vector statement that updates x, y, and z.

Visual periodically examines the current values of each object's attributes, including ball.pos, and paints a 3D picture.

The program checks for the ball touching the floor and if necessary reverses the y-component of velocity, otherwise the velocity is updated due to the gravitational force.
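
To see that the loop really contains only computation, the same update rule can be run without any graphics at all. The sketch below is plain Python with no `visual` module; the function name `simulate` and its parameters are assumptions introduced for illustration, while the starting values and the update rule are taken from the program above.

```python
def simulate(steps, dt=0.01, g=9.8, radius=1.0):
    """Advance the bouncing ball for a number of steps and return (y, vy).

    Only the vertical component is tracked, since the ball starts with a
    purely vertical velocity.
    """
    y, vy = 4.0, -1.0          # same starting height and velocity as above
    for _ in range(steps):
        y = y + vy * dt        # position update from the current velocity
        if y < radius:
            vy = -vy           # the ball touches the floor: reverse velocity
        else:
            vy = vy - g * dt   # otherwise gravity accelerates the ball
    return y, vy


# State of the ball after one simulated second (100 steps of 0.01 s).
height, speed = simulate(100)
```

In VPython the rendering happens as a side effect of assigning `ball.pos`; here nothing is drawn, which makes the numerical behaviour easy to inspect or test.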

Other Languages

CG

or how to program the graphic card to change the rendering pipeline

Java3D, VRML, Open Inventor ... provide a scene graph to organise 3D data

There is no such thing in OpenGL (nor in JOGL)

... =>

Open Scene Graph

OpenSG
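
What a scene graph adds on top of a drawing library can be shown with a very small sketch: nodes form a tree, and each node's position is expressed relative to its parent. The `SceneNode` class and its methods are hypothetical names chosen for this example; they do not reproduce the API of Java3D, Open Inventor, Open Scene Graph or OpenSG, and only translations are handled.

```python
class SceneNode:
    """A node with a local translation and any number of children."""

    def __init__(self, name, offset=(0.0, 0.0, 0.0)):
        self.name = name
        self.offset = offset      # position relative to the parent node
        self.children = []

    def add(self, child):
        """Attach a child node and return it, to allow chained building."""
        self.children.append(child)
        return child

    def world_positions(self, parent=(0.0, 0.0, 0.0)):
        """Walk the graph, accumulating offsets from the root downwards."""
        here = tuple(p + o for p, o in zip(parent, self.offset))
        result = {self.name: here}
        for child in self.children:
            result.update(child.world_positions(here))
        return result


root = SceneNode("world")
tree = root.add(SceneNode("tree", (5.0, 0.0, 0.0)))
tree.add(SceneNode("leaves", (0.0, 3.0, 0.0)))
positions = root.world_positions()
```

Moving the `tree` node moves its leaves with it, which is exactly the bookkeeping a program written directly against OpenGL or JOGL has to do by hand.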

Communications Middleware

Standards in this category focus on the issues concerning connecting users together on the network to create shared worlds. Although some of them also provide graphical capabilities, their main target is networking, world status updating, and object-sharing capabilities.

Living Worlds

- Working Group of the VRML Consortium
- Aims to define a set of VRML 2.0 conventions that support applications which are multiuser and interoperable: "Scenesharing"
- LW is a first attempt to devise a common VRML 2.0 interface to support basic interaction in multi-user virtual scenes and enables each participant to know that someone has arrived, departed, sent a message or changed something in the scene.
- No (user-friendly) implementations of LW; see LivingSpace: A Living Worlds Implementation using an Event-based Architecture
- Dr. Bob Rockwell of Blaxxun Interactive: former co-chair (list). He passed away on April 8th, 1998

Living Worlds (LW) is a Working Group of the VRML Consortium, supported by a large number of organizations. The LW effort aims to define a set of VRML 2.0 conventions that support applications which are multiuser and interoperable. "Scenesharing", which is concerned with the coordination of events and actions across the network, is one of the main elements of LW. In short, LW is a first attempt to devise a common VRML 2.0 interface to support basic interaction in multi-user virtual scenes and enables each participant to know that someone has arrived, departed, sent a message or changed something in the scene.

Although LW specifies rules for object sharing and exchanging update messages across the network, it is not a communications middleware; it is presented in this section because it is also not concerned with graphics and rendering. In fact LW does not care about the actual technical implementation of the communications system that enables world sharing. Referred to as the Multi User Technology (MuTech), the actual system that runs on the network and is responsible for message passing among clients can be developed with any technology as long as its interface to the VRML world follows the LW specifications. Currently no implementations of LW are publicly available.

http://www.vrml.org/WorkingGroups/living-worlds/
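
The separation between the scene-sharing interface and the network technology behind it can be sketched as follows. This is not the Living Worlds specification: the `SharedScene` class, its method names and the event dictionaries are assumptions for illustration. Only the four kinds of notification (arrived, departed, message, changed) and the idea of a replaceable MuTech come from the text above.

```python
class SharedScene:
    """Toy shared scene: forwards four kinds of notification to a transport."""

    EVENTS = ("arrived", "departed", "message", "changed")

    def __init__(self, transport):
        # Any callable that delivers one event; it plays the role of the MuTech.
        self.transport = transport
        self.participants = set()

    def _notify(self, kind, who, detail=None):
        assert kind in self.EVENTS
        self.transport({"kind": kind, "who": who, "detail": detail})

    def join(self, who):
        self.participants.add(who)
        self._notify("arrived", who)

    def leave(self, who):
        self.participants.discard(who)
        self._notify("departed", who)

    def say(self, who, text):
        self._notify("message", who, text)

    def change(self, who, what):
        self._notify("changed", who, what)


log = []                          # a list stands in for the network layer
scene = SharedScene(log.append)
scene.join("alice")
scene.say("alice", "hello")
scene.leave("alice")
```

Because the scene only calls `transport(event)`, the list used here could be replaced by a socket, a multicast group or any other delivery mechanism without touching the scene code.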

Open Community

A proposal of standard for multiuser enabling technologies from Mitsubishi Electric Research Laboratories (MERL)

Spline (Scalable Platform for Large Interactive Networked Environments) is an implementation compliant with OC which provides a library with ANSI C and Java API.

.... 1997

Open Community (OC) is a proposed standard for multiuser enabling technologies from Mitsubishi Electric Research Laboratories. Spline (Scalable Platform for Large Interactive Networked Environments) is an OC-compliant implementation which provides a library with an ANSI C API and, soon, a Java API. This library provides very detailed and essential services for real-time multi-user cooperative applications. For its communication, Spline uses the Interactive Sharing Transfer Protocol (ISTP), a hybrid protocol supporting many modes of transportation for VR data and information, namely:

- 1-1 Connection Subprotocol: used to establish and maintain a TCP connection between two ISTP processes;
- Object State Transmission Subprotocol: used to communicate the state of objects from one ISTP process to another. Such updates may be sent via 1-1 Connection or UDP Multicast (1-1 Connection is used as a backup route);
- Streaming Audio Subprotocol: used to stream audio data via RTP;
- Locale-Based Communications Subprotocol: this is the core of ISTP and supports the sharing of information about objects in the world model;
- Content-Based Communication Subprotocol: supports central-server-style communication of beacon information. Beacons are tags through which it is possible to retrieve a given object (similar to a URL).

The last two subprotocols are built upon the other three. ISTP does not provide video streaming capability to date; however, such support could be provided by extending ISTP with an appropriate extra subprotocol.
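
The "backup route" behaviour described for object state transmission can be illustrated with a small sketch. It does not implement ISTP or use the Spline library: the class `ObjectStateSender`, the two send callables and the update format are hypothetical, and the transports are simulated rather than real sockets.

```python
class ObjectStateSender:
    """Send object state by multicast, falling back to a 1-1 connection."""

    def __init__(self, multicast_send, connection_send):
        self.multicast_send = multicast_send      # stands in for UDP multicast
        self.connection_send = connection_send    # stands in for the TCP 1-1 link

    def send(self, object_id, state):
        """Deliver one state update and report which route carried it."""
        update = {"id": object_id, "state": state}
        try:
            self.multicast_send(update)
            return "multicast"
        except OSError:
            # Multicast unavailable: use the reliable 1-1 connection instead.
            self.connection_send(update)
            return "1-1"


def broken_multicast(update):
    """Simulated multicast path that always fails."""
    raise OSError("multicast not reachable")


delivered = []
sender = ObjectStateSender(broken_multicast, delivered.append)
route = sender.send("avatar-7", {"x": 1.0, "y": 0.0})
```

Here the update ends up in `delivered` through the 1-1 path; with a working multicast callable the same call would report `"multicast"` and never touch the connection.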

Virtual Communities

Active Worlds : http://www.activeworlds.com/ and Alpha World

Paris Second World http://www.2nd-world.fr/

SCOL by Cryo Networks http://www.cryo-networks.com/ and Cryopolis...

Cryo: court-ordered liquidation pronounced

Business - 2 October 2002: Cryo Networks in the bin!

Business - 26 September 2000: Philippe Ulrich and the Mantas theory

Blaxxun contact

Deep Matrix

Geometrek's open source solution may be an alternative, or a starting point, for those who want to build a virtual community without depending on a vendor. Both the server and the client are written in Java. Deep Matrix is compatible with the most common VRML plug-ins (Cortona, Blaxxun and CosmoPlayer), which it uses simply as a 3D engine. As you will have gathered, the solution is very open; it is also free for non-commercial use.

VNET

Here is another open source multi-user server. It is built on the same architecture as Deep Matrix: a Java server, and a Java client with a VRML plug-in to display the virtual world. The world-sharing protocol (object movement, private messages, shared events) is interesting. VNET is supplied with its source code.

News:

VNET and Deep Matrix have been abandoned since 1999.

Following Blaxxun's financial problems, many creators of virtual worlds do not know how they would continue to share their creations if Blaxxun were to close its free server. Some user groups are currently organising to "dig up" VNET and DeepMatrix. Both solutions are in fact stable and full of promise, and could be an alternative to Blaxxun's free offering. Let us hope all the same that Blaxxun survives the storm!

Games

Doom, Quakes... STU

EverQuest : http://everquest.station.sony.com/

XPilot http://www.xpilot.org/ (2D only)

High Level Architecture (HLA)

HLA is the spiritual successor to DIS, although it focuses more closely on the problems of arranging very large-scale simulations rather than the run-time distribution of data.

DIS / HLA - military standards tailored to the requirements of simulation and war games

Framework for distributed simulation systems developed by the U.S. Defense Modeling and Simulation Office

Defines standard services and interfaces to be used by all participants in order to support efficient information exchange

The High Level Architecture (HLA) is a software architecture for creating computer simulations out of component simulations. The HLA provides a general framework within which simulation developers can structure and describe their simulation applications.

HLA is a framework for distributed simulation systems developed by the U.S. Defense Modeling and Simulation Office (DMSO). HLA attempts to provide a very generic environment that any virtual object can attach to in order to participate in a simulation. It is a very well-thought-out architecture that defines standard services and interfaces to be used by all participants in order to support efficient information exchange. HLA has been adopted as the Facility for Distributed Simulation Systems 1.0 by the Object Management Group (OMG) and is now in the process of becoming an open standard through the IEEE.

HLA's Runtime Infrastructure (RTI) is a set of software components that implement the services specified by HLA. Today, a few RTI implementations for different platforms are available.
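
The general idea of participants exchanging information through a common infrastructure, rather than talking to each other directly, can be sketched with a toy publish/subscribe hub. This is a deliberately simplified illustration and not the HLA interface specification: `ToyRTI`, its methods and the object class names are all assumptions.

```python
class ToyRTI:
    """A toy stand-in for a runtime infrastructure: publish/subscribe only."""

    def __init__(self):
        self.subscribers = {}   # object class name -> list of callbacks

    def subscribe(self, object_class, callback):
        """Register interest in updates for one class of simulated object."""
        self.subscribers.setdefault(object_class, []).append(callback)

    def update(self, object_class, attributes):
        """Deliver an attribute update to every subscribed participant."""
        for callback in self.subscribers.get(object_class, []):
            callback(attributes)


rti = ToyRTI()
seen_by_radar = []                                  # a hypothetical radar simulation
rti.subscribe("Aircraft", seen_by_radar.append)
rti.update("Aircraft", {"id": "A1", "altitude": 9000})
rti.update("Ship", {"id": "S1"})                    # nobody subscribed: dropped
```

A real RTI offers far more than this (time management and ownership transfer, for example); the sketch only shows why component simulations need to agree on interfaces rather than on each other's internals.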

If you want to learn HLA... http://www.ecst.csuchico.edu/~hla/courses.html

DIS / HLA - military standards tailored to the requirements of simulation and war games. DIS is an efficient, if inflexible, protocol for medium scale simulation. The imaginatively named High Level Architecture (HLA) is the spiritual successor to DIS, although it focuses more closely on the problems of arranging very large-scale simulations rather than the run-time distribution of data.

HLA remains a hot topic in defence circles. Limitations of the specification provide great opportunities for the lab, with the prospect of serious defence dollars on offer for a full simulation infrastructure.

Adam Martin wrote:

> From: "Lee Sheldon" <linearno@gte.net>

>> Ann Arbor, MI - October 2, 2001 - Cybernet Systems, an Ann

>> Arbor-based research and development firm, today announced the

>> availability of a new massive multi-player networking

>> architecture that enables developers to create online games in

>> which tens of thousands of players can simultaneously interact

>> in the same environment.

>> www.openskies.net/news/networkrelease.shtml

>> Anybody have any thoughts about this?

> Having read all their stuff, and looked at the technology, I'd say

> its not as interesting as they make it sound; my impression from

> what they are saying publicly is that they are merely rehashing

> some pretty old ideas from wide area network multimedia stream

> distribution, wrapping it up as "applicable to MMOGs" and trying

> to patent it. Will check out the patent when we can (they are

> potential competition to our work) but based on their "white

> paper" I'm rather disappointed by the disparity between the

> marketing hype and the reality.

They're one of a couple players in the field currently. Each of the

current products is taking a somewhat different approach, both in

technological details and the business model behind the company.

The above summary of Open Skies leaves out much of their history and

grounding in solid research and implementations. Open Skies bases

their product on HLA, the Department of Defense standard "High Level

Architecture" which was the successor to the older DIS

standards. Cybernet has been working in that field for years, so I'd

doubt that that part of their product is new or totally unproven.

(That's not something that I'd expect to be true for some of the

other products in the field.)

A good starting point for information on HLA itself is at

http://www.dmso.mil/hla

The High Level Architecture (HLA) is a general purpose

architecture for simulation reuse and interoperability. The

HLA was developed under the leadership of the Defense Modeling

and Simulation Office (DMSO) to support reuse and

interoperability across the large numbers of different types of

simulations developed and maintained by the DoD. The HLA

Baseline Definition was completed on August 21, 1996.

Now, as Adam described, in addition to that, they're also pitching

their distributed caching/etc system.

In their favor, they also have a lot more public documentation than

some, possibly all, of their competitors. Also, if more people were

to be following the HLA standards, it might be interesting to see to

what extent interoperability among the products (at the source level

if nothing else) might be possible or useful.

They don't appear to have a lot of direct game systems or anything

at that level pre-built and part of their package. They seem to

currently be focusing their product offerings at the lower levels,

with promises of more to come later and sample code that does some

of the game system type things. So it isn't something that

currently appears to be aiming at the same type of market as the

MMORPG Construction Kit. :)

Their licensing isn't very clear to me (without contacting them).

Some of their distributed caching/server technology appears at least

superficially to be similar to TerraPlay:

http://www.terraplay.com/.

Overall, I think there's a good bit of interesting fodder for

MUD-Dev discussion in their technology, if there's interest in that

sort of thing on the list. (I don't think HLA has ever really been

discussed on list? Or really any of the practical models for

scaling that are currently seeing commercial application.)

- Bruce

Java Shared Data Toolkit (JSDT)

Provides an abstract model of the network, designed specifically to support collaborative applications

Provides different modes of transportation

- a reliable socket mode
- an RMI mode, which uses Remote Method Invocation
- a multicast mode that makes use of the Lightweight Reliable Multicast Protocol (LRMP), useful for shared applications with a large number of participants
- even HTTP!

Pure Java, and hence 100% portable

Not specifically designed for 3D simulations and virtual environments

not tuned for high performance or low latency

Java Media Framework.

Allows you to manage audio and video streams within a Java program

Issue : most of the "real" work is done in native code (not portable).

Although not specifically designed for 3D simulations and virtual environments, JSDT is part of the Java Media APIs developed by Javasoft and provides real-time sharing of applets and/or applications. JSDT provides many facilities, such as tokens that can be used for coordinating shared objects. It also provides different modes of transportation, including a reliable socket mode, an RMI mode which uses Remote Method Invocation, and a multicast mode that makes use of the Lightweight Reliable Multicast Protocol (LRMP) and is useful for shared applications with a large number of participants.

Java and the Network...

Java and its associated media streaming and networking packages represent an alternative basis for developing collaborative virtual environments. The core class libraries provide socket and distributed object (RMI) models of communication. In addition, there are a number of standard Java extensions that provide specialised data distribution mechanisms. Two extensions are especially relevant to the task of building collaborative virtual environments: the Java Shared Data Toolkit and the Java Media Framework.

The Java Shared Data Toolkit (JSDT) provides an abstract model of the network, designed specifically to support collaborative applications.

"The JavaTM Shared Data Toolkit software is a development library that allows developers to easily add collaboration features to applets and applications written in the Java programming language."

"This is a toolkit defined to support highly interactive, collaborative applications written in the Java programming language."

The fundamental abstraction used in the JSDT is that of a shared byte buffer. These buffers are un-typed blocks of data, but with the use of Java object serialisation they can be used to replicate complete graphs of objects. Buffer replication can be performed over a range of different network transports, including a reliable multicast system as well as RMI, sockets and even HTTP. A great strength of JSDT is that it is pure Java, and hence 100% portable. The main limitation is that it is not tuned for high performance or low latency, so its suitability for building collaborative VEs is an open question. If object serialisation is used then JSDT will also have problems inter-operating with non-Java clients.
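
The shared byte buffer idea can be sketched in Python, using the standard `pickle` module in the role that Java object serialisation plays for JSDT. This is an analogy rather than the JSDT API: the `SharedByteBuffer` class and its listener mechanism are assumptions, and everything runs in one process instead of over a network.

```python
import pickle


class SharedByteBuffer:
    """An untyped block of bytes that notifies listeners when it changes."""

    def __init__(self):
        self.data = b""
        self.listeners = []     # callables invoked with the new bytes

    def set(self, data):
        """Replace the buffer contents and notify every listener."""
        self.data = bytes(data)
        for listener in self.listeners:
            listener(self.data)


# Replicate a small object graph through the buffer using serialisation.
replica = {}
buffer = SharedByteBuffer()
buffer.listeners.append(lambda raw: replica.update(pickle.loads(raw)))

world = {"avatars": [{"name": "alice", "pos": [0, 1, 0]}]}
buffer.set(pickle.dumps(world))
```

The buffer itself never looks inside the bytes, which is what keeps it general. The price is the one noted above: a receiver written in another language cannot easily decode a language-specific serialisation format.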

The second relevant extension is the Java Media Framework.

"The Java Media Framework (JMF) is an application programming interface (API) for incorporating time-based media into Java applications and applets."

"The Java Media Framework API specifies a unified architecture, messaging protocol and programming interface for playback, capture and conferencing of compressed streaming and stored timed-media including audio, video, and MIDI across all Java Compatible platforms."

Essentially, JMF allows you to manage audio and video streams within a Java program. The crucial point about JMF is that most of the "real" work is done in native code. Native platform codecs are used throughout, which means that performance is excellent, but compatibility is harder to achieve.

The combination of the core Java networking classes, JSDT, JMF, a portable execution format (the Java class file) and an automatic platform-neutral serialisation mechanism makes Java a very attractive base on which to build collaborative VEs. As with Java3D, the principal concern is the runtime performance of Java. However, experiments conducted by the authors with early implementations of Java indicate that network bandwidth is a more common cause of performance bottlenecks: even a simple interpreted JVM can saturate a 155 Mbit/s ATM network. Interoperability problems notwithstanding, Java should be considered an excellent environment for networking applications.

Text extracted from an unpublished paper by Sam Taylor and Hugh Fisher

CAVERNsoft

CAVERN, the CAVE Research Network, is an alliance of industrial and research institutions equipped with immersive equipment and high-performance computing resources all interconnected by high-speed networks to support collaboration in design, training, visualization, and computational steering in virtual reality.

C++ hybrid-networking/database library optimized for the rapid construction of collaborative Virtual Reality applications

VR developers can quickly share information between their applications with very little coding

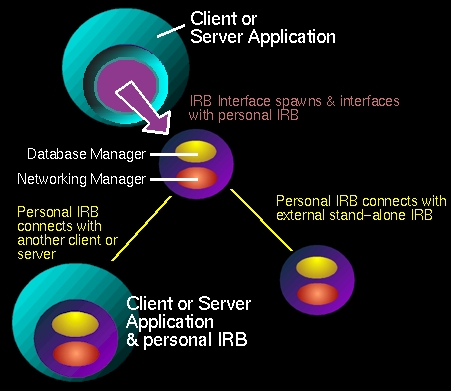

The Information Request Broker (IRB) is the nucleus of all CAVERN-based client and server applications

An IRB is an autonomous repository of persistent data driven by a database, and accessible by a variety of networking interfaces

CAVERNsoft is most succinctly described as a C++ hybrid-networking/database library optimized for the rapid construction of collaborative Virtual Reality applications. Using CAVERNsoft VR developers can quickly share information between their applications with very little coding. CAVERNsoft takes over the responsibility of making sure data is distributed efficiently.

The IRB

The Information Request Broker (IRB) is the nucleus of all CAVERN-based client and server applications. An IRB is an autonomous repository of persistent data driven by a database, and accessible by a variety of networking interfaces. The goal is to develop a hybrid system that combines a distributed shared memory model with distributed database technology and real-time networking technology under a unified interface, allowing the construction of arbitrary collaborative VR (CVR) topologies.

http://www.evl.uic.edu/cavern/cavernsoft/

DIVE

The Distributed Interactive Virtual Environments project from the Swedish Institute of Computer Science is one of the original attempts to develop collaborative VEs.

Allows its participants to navigate in 3D space and see, meet and interact with other users and applications.

Extensive use of Tcl

Provides persistence through the use of a centralised database server

Still under active development, DIVE is a well-known and widely respected toolkit. It is designed around the notion that the environment should serve both for interactions and for developing new material. It makes extensive use of Tcl to provide extensibility and provides persistence through the use of a centralised database server.

MPEG4

-

MPEG-1: video and audio compression (CD-ROM)

-

MPEG-2: video and audio compression

Better quality, but more computing resources needed

-

There is no MPEG-3: "MP3" is MPEG-1 Audio Layer 3

-

MPEG-4: aggregation of all possible types of media + improvement in low-bandwidth video

Description of the video in terms of objects

-

Based on QuickTime for mixing the different types of data

-

Based on VRML for the scene graph

-

Even Java is part of MPEG-4

-

MPEG stands for Moving Picture Experts Group

-

DivX: a mix of different building blocks coming from MPEG-4

MPEG-4 has future potential for use in CVEs, but is not a viable technology in the short to medium term, for the following reasons:

-

MPEG-4 is designed around a model of uni-directional one-to-one communication, with "beta" support for one-to-many and no support for many-to-many links

-

There is little or no provision for any complex interaction by the user with the MPEG-4 world. In particular, there is no support for 6DOF input devices such as the Polhemus, or for haptic feedback

-

There is no provision for interaction by multiple users within the same VE, for instance locking mechanisms or conflict resolution

-

Although MPEG-4 is designed for real-time decoding, it is not designed for real-time encoding. There are profound differences between real-time and non-real-time audio and video encoders. The experience of trying to build video conferencing tools on top of MPEG-2 suggests that any attempt to perform real-time interactions through MPEG-4 will be fraught with problems and severely limited.

VRML-DIS-Java

A potentially successful approach may be to adopt the model of the VRML-DIS-Java working group of the Web3D Consortium. This group has attempted to adapt the existing Distributed Interactive Simulation (DIS) military protocols for use in building shared VRML worlds. They have made extensive use of Java to achieve this integration, and their results are quite compelling. The limitation of this approach is the same as that of any DIS-based CVE: DIS is an excellent protocol for distributing information about a simulated battlefield, but it is utterly inadequate when interactions do not involve high-velocity projectiles, explosions or weapons of mass virtual destruction.

Not modified since 2000 : http://web.nps.navy.mil/~brutzman/vrtp/dis-java-vrml/

Not modified since 2002 : http://cgi.ncsa.uiuc.edu/cgi-bin/General/CC/irg/clearing/projectAbstract.pl?projid=850

What Else ?

VR-dedicated packages that explore networking ?

WorldToolkit by Sense8

"WorldToolkit is the leading cross-platform real-time 3D development tool."

(World2World, the Client-Server... WorldUp)

Virtools worldtoolkit

OpenMASK (Modular Animation and Simulation Kit) is a platform for the development and execution of modular applications in the fields of animation, simulation and virtual reality.

VR Juggler - Open Source Virtual Reality Tools

Tiwi : only for the Wedge...

Many different paths to explore/listen to

COVEN is a four-year European project that was launched with the objective of comprehensively exploring the issues in the design, implementation and usage of multi-participant shared virtual environments, at scientific, methodological and technical levels.

Avocado: developed at GMD, Avocado builds a field network abstraction over the Performer scene graph. Distribution is achieved primarily through the use of networked routes between fields. This is a very simplistic model for distribution, but one that works well for small applications. Avocado is built on the Ensemble toolkit from Cornell.
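
The idea of fields connected by routes can be sketched in a few lines. In the hypothetical Java fragment below, a `Field` holds a value and forwards every change to the fields it is routed to; in a distributed setting the forwarding step would cross the network instead of being a local call. None of these names come from Avocado, whose actual API is not described here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

// Hypothetical illustration of fields connected by routes; not Avocado code.
class FieldRouteSketch {

    static class Field<T> {
        private T value;
        private final List<Field<T>> routes = new ArrayList<>();

        Field(T initial) {
            this.value = initial;
        }

        T get() {
            return value;
        }

        // Connect this field to a destination field.
        void routeTo(Field<T> destination) {
            routes.add(destination);
        }

        // Setting a field pushes the new value along every outgoing route.
        // A networked route would send the value to a remote process here.
        void set(T newValue) {
            if (Objects.equals(newValue, value)) {
                return; // unchanged: nothing to forward, which also ends cycles
            }
            value = newValue;
            for (Field<T> destination : routes) {
                destination.set(newValue);
            }
        }
    }

    public static void main(String[] args) {
        Field<Double> sliderHeight = new Field<>(0.0);
        Field<Double> localModelHeight = new Field<>(0.0);
        Field<Double> remoteModelHeight = new Field<>(0.0);

        sliderHeight.routeTo(localModelHeight);
        sliderHeight.routeTo(remoteModelHeight);

        sliderHeight.set(1.5);
        System.out.println(localModelHeight.get() + " " + remoteModelHeight.get());
    }
}
```

The simplicity noted above shows here too: a route only copies values, so anything beyond that (conflict resolution, ordering of concurrent updates) would have to be built separately.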

3D WORKING GROUP : Parallel Graphics

"The absence of a flexible, standard 3-D format represents one of those key barriers. By working with industry leaders and graphics experts, we plan to create a format that will do for 3-D on the Web what the JPEG format did for digital photography on the desktop."

Parallel Graphics

ParallelGraphics has joined the 3D Working Group that we told you about a few days ago. This group of vendors is expected to produce a Web 3D standard for the CAD world. Some of its members: Intel Corporation, 3Dlabs Inc., Actify Inc., Adobe Systems, ATI Technologies, The Boeing Company, Dassault Systemes, Lattice Technology, Mental Images, Microsoft Corporation, Naval Postgraduate School, National Institute of Standards and Technology (NIST), SGDL Systems, Inc., i3Dimensions, Tech Soft America/OpenHSF.

Patrick Gelsinger (Intel vice president and chief technology officer) said of this alliance: "The absence of a flexible, standard 3-D format represents one of those key barriers. By working with industry leaders and graphics experts, we plan to create a format that will do for 3-D on the Web what the JPEG format did for digital photography on the desktop."

Web3D Working Groups

Web3D Consortium : http://www.web3d.org/

X3D DIS-XML Working Group

The DIS-XML workgroup is focused on developing Distributed Interactive Simulation (DIS) support in X3D. The goal is to explore and demonstrate the viability of DIS-XML networking support for X3D to open up the modeling & simulation market to open-standards Web-based technologies.

The DIS Component of X3D is already part of the X3D specification

Other WG : http://www.web3d.org/x3d/workgroups/

Current Working Groups:

• X3D Conformance Program

• X3D Shaders

• GeoSpatial

• DIS-XML

• H-Anim

• X3D Source

X3D Focus Market Working Groups

• CAD

• Medical

• VizSim

QTVR other things

The one to ban : IPIX

The free tool that was sued : PanoTools by Helmut Dersch

Hot Spots : exploration of world

Associated Map

Java Versions

Example : PMVR™ (Patented Mappable VR)

-

PMVR http://www.duckware.com/pmvr/index.html#example

-

Hawaii Vacation http://www.idyll-by-the-sea.com/hawaii/index.html

The best place to start : http://www.panoguide.com/

iCinema projects ... QTVR on the wedge...

The principle of a QT panorama is to stitch together a sequence of images taken through 360° around you, producing a circular strip that you rotate with the mouse. Everyone has seen one already; it is quite spectacular when the subject lends itself to it. Since its early days, the format has been extended with:

- multi-node panoramas (clicking on a defined area of the panorama takes you to another one, as in this lovely walk through Corsica) http://evm.vr-consortium.com/regions/zzf/commun/framclub.htm

- the Cubic format (in addition to the horizontal dimension, the scene can also be viewed through 360° vertically) http://www.apple.com/quicktime/gallery/cubicvr/

- panoramic sound http://www.axisimages.com/BelAirHotel_qtvr/mid.html

- contributions from Flash http://blueabuse.totallyhip.com/flash/

http://www.yamashirorestaurant.com/tour/index.html

- Zoomify, http://www.zoomify.com/

http://www.pricewestern.com/iqtvra/boulder/Zoomify_Pg.html

The superb Baptistery of Parma http://vrm.vrway.com/projects/parma/

Applications :

- architecture/real estate (virtual tour of a house),

- tourism (landscapes, monuments, historic sites, art galleries)

Inside the pyramid of Khufu, Egypt http://www.pbs.org/wgbh/nova/pyramid/explore/khufuenter.html

The rooms of the Louvre museum, a classic http://www.louvre.or.jp/louvre/QTVR/francais/index.htm

An original presentation of works of art http://www.axisimages.com/vrviewer/index.html

The UNESCO World Heritage sites, a superb collection of VRs

http://preview.whtour.net/list.html

to see before all of this becomes purely virtual, like this Chinese palace, destroyed by fire, which no longer exists http://preview.whtour.net/asia/cn/wudangshan/news.html

- industry (car interiors, ...) http://www.buick.com/lesabre/photos/360/

Equipment : a digital camera with a wide-angle lens (equivalent to 28 or 35 mm), mounted on a tripod with a graduated head. The simplest approach is to take one photo in portrait orientation every 22.5°, 16 photos in all, which gives the best handling of the seams between views. But more than technique (once past the learning stage it is actually very easy), the interest, and therefore the success, of your VR panorama depends on the choice of your nodal point, the spot from which you take your sequence of photos, as well as on the lighting conditions (it is still photography). A few trials and some location scouting are often needed at first.
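
The figures above are easy to check. The short Java sketch below computes the number of shots for a given rotation step and, assuming a full-frame 36 × 24 mm sensor held in portrait orientation (so the 24 mm side spans the horizontal), estimates how much neighbouring shots overlap. The class and method names are made up for this example, and the rectilinear field-of-view formula 2·atan(d / 2f) ignores lens distortion.

```java
// Hypothetical helper for planning a cylindrical panorama shoot.
class PanoramaPlanner {

    // Number of shots needed to cover a full turn with the given step.
    static int shotsForFullTurn(double stepDegrees) {
        return (int) Math.ceil(360.0 / stepDegrees);
    }

    // Field of view, in degrees, of a rectilinear lens along one sensor side.
    static double fieldOfViewDegrees(double sensorSideMm, double focalLengthMm) {
        return Math.toDegrees(2.0 * Math.atan(sensorSideMm / (2.0 * focalLengthMm)));
    }

    // Fraction of each frame shared with the next one.
    static double overlapFraction(double stepDegrees, double fovDegrees) {
        return 1.0 - stepDegrees / fovDegrees;
    }

    public static void main(String[] args) {
        double step = 22.5;
        System.out.println("shots: " + shotsForFullTurn(step));

        // Assumed: 24 mm sensor side horizontal (portrait), 28 and 35 mm lenses.
        for (double focal : new double[] {28.0, 35.0}) {
            double fov = fieldOfViewDegrees(24.0, focal);
            System.out.printf("%.0f mm: fov %.1f deg, overlap %.0f%%%n",
                    focal, fov, 100.0 * overlapFraction(step, fov));
        }
    }
}
```

Under these assumptions, a 22.5° step leaves roughly half of each frame overlapping its neighbour at 28 mm and about 40% at 35 mm, which is consistent with the remark that the seams are easy to handle at that spacing.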

Software : VR Worx 2.1 http://www.vrtoolbox.com/vrthome.html (the simplest to start with)

Stitcher http://www.realviz.com/products/st/index.php (more precise, can also produce CubicVR)

A few excellent sites to explore :

VRWay - http://vrm.vrway.com/

Axis Images http://www.axisimages.com/home.html

http://www.home.earthlink.net/~robertwest/coolstuff.html

Links http://www.iqtvra.org/iqtvra/docs/en/index.php?includeFile=4.Showcase.dir/1.Member_Gallery.php

http://www.360geographics.com/index.html

Tutorials: http://www.letmedoit.com/qtvr/qtvr_online/course_index.html

QuickTime VR Object, though less widely used, is nonetheless interesting. This time the camera is fixed and the object to be photographed is placed on a turntable that is rotated at regular intervals. On screen, the object can then be examined from every angle, with, as for the panorama, the ability to zoom in on a detail. Ideal for the website of an antique dealer or a jeweller or, why not, for presenting a collection of wedding dresses, scale models or hunting boots. Right up to the most bizarre: http://www.apple.com/hardware/gallery/imac_july2002_320.html

Just a few examples among many others :

http://aroundquicktime.free.fr/bouddha.html

http://www.nass.de/demos/ars/Tut_jpg25_164k.mov

http://www.synthetic-ap.com/qtvr/samples/ashley2.mov

Sometimes the magic works: http://www.axisimages.com/gallery_2001/danse/danse.html (find the right rotation speed).

Since we are on the subject of magic tricks :

http://www.holonet.khm.de/Magisterium/Magisterium.html

http://www.outline.be/magie/myst1.html (clear the cache between each trick)

To finish, here are a few general QuickTime links for going further :

http://www.qtbridge.com/index.html

http://blueabuse.totallyhip.com/tut/

http://www.quicktiming.org/

And an example of what can be done with LiveStage Pro

http://www.navicast.net/articles/en/1080/

http://www.totallyhip.com/

--------------------

panØgraph - 360° panoramic photographs

panoramas.dk/

Gotacom.com

San Francisco

Miami

aircraft

a page of links

360° ressources.de (superb!)

http://www.arnaudfrichphoto.com.

More tutorials for learning and improving :

http://www.outsidethelines.com/EZQTVR.html

http://www.letmedoit.com/qtvr/qtvr_online/course_index.html

http://www.flipsidestudios.com/info/DIfeature.html

http://vrm.vrway.com/vartist/showcase/QTVR_tutorials.html

http://www.designer-info.com/Writing/producing_panoramas.htm

http://www.creativepro.com/printerfriendly/story/12727.html

http://www.virtualdenmark.dk/fullscreen/