eScience Lecture Notes

Virtual Reality : The Sight

Source of information for this chapter

Le traité de la réalité virtuelle

By Philippe Fuchs, Guillaume Moreau, Jean-Paul Papin

Preface by Alain Berthoz, Professor at the Collège de France

http://www.ensmp.fr/Fr/Services/PressesENSMP/Collections/ScMathInfor/Livres/TraiteRealiteVirtuelle.html

Optical Illusions

Optical Illusions (2)

Count the black dots!

Source : http://www.sylloge.com/misc_bin/illusion.html

Optical Illusions (3)

Are the horizontal lines parallel or do they slope?

Source : http://weirdillusion.tripod.com/illusion2.html

Optical Illusions (4)

How Many Legs Does The Elephant Have ?

Source : http://weirdillusion.tripod.com/illusion3.html

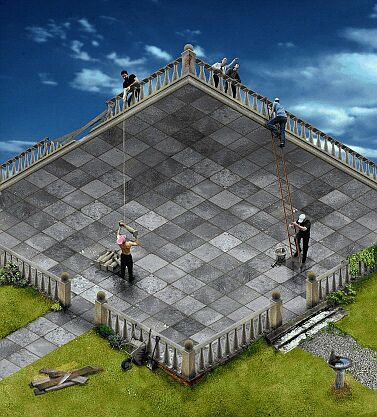

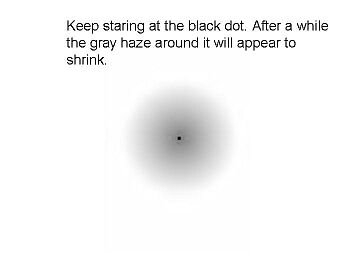

Optical Illusions (5)

Source :

Optical Illusions (6)

YELLOW BLUE ORANGE BLACK RED GREEN PURPLE YELLOW RED ORANGE GREEN BLACK BLUE RED PURPLE GREEN BLUE ORANGE

Left-right conflict: your right brain tries to say the colour, but your left brain insists on reading the word.

Source : http://giraffian.com/trivia/colours.shtml

Optical Illusions (7)

Is The Book Looking Towards You ... Or Away From You?

Source : http://www.annexed.net/box/escher/oi/tour5.html

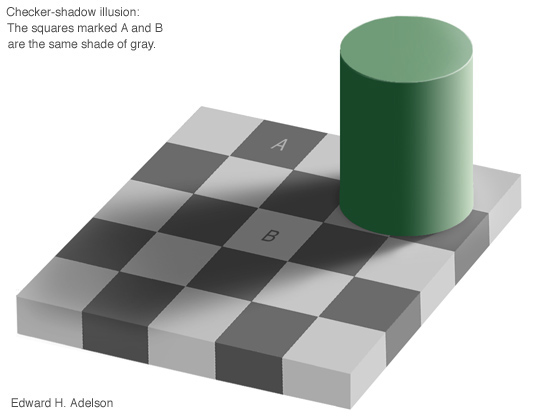

Optical Illusions (8)

A light check in the shadow is the same gray as a dark check outside the shadow.

Source : http://www-bcs.mit.edu/people/adelson/checkershadow_illusion.html

Virtual Reality : The Sight

Technocentric Designer Diagram

Our Visual System

Extract from http://cs.wpi.edu/~matt/courses/cs563/talks/brian1.html by Brian Lingard

We obtain most of our knowledge of the world around us through our eyes. Our visual system processes information in two distinct ways: conscious and preconscious processing. Looking at a photograph, or reading a book or a map, requires conscious visual processing and hence usually some learned skill. Preconscious visual processing, however, describes our basic ability to perceive light, color, form, depth and movement. Such processing is more autonomous, and we are less aware that it is happening.

Physically, our eyes are fairly complicated organs. Specialized cells form structures which perform several functions: the pupil acts as the aperture, where muscles control how much light passes; the crystalline lens focuses light, using muscles to change its shape; and the retina is the workhorse, converting light into electrical impulses for processing by our brains. Our brain performs visual processing by breaking down the neural information into smaller chunks and passing it through several filter neurons. Some of these neurons detect only drastic changes in color; other neurons detect only vertical edges or horizontal edges.

Depth information is conveyed in many different ways. Static depth cues include interposition, brightness, size, linear perspective, and texture gradients. Motion depth cues come from the effect of motion parallax, where objects which are closer to the viewer appear to move more rapidly against the background when the head is moved back and forth. Physiological depth cues convey information in two distinct ways: accommodation, which is how the lens of the eye changes its shape to focus on objects at different distances, and convergence, which is a measure of how far our eyes must turn inward when looking at objects closer than 20 feet. We obtain stereoscopic cues by comparing the left and right views coming from each of our eyes and extracting the relevant depth information.

Our sense of visual immersion in VR comes from several factors which include field of view, frame refresh rate, and eye tracking. Limited field of view can result in a tunnel vision feeling. Frame refresh rates must be high enough to allow our eyes to blend together the individual frames into the illusion of motion, and to limit the sense of latency between movements of the head and body and regeneration of the scene. Eye tracking can solve the problem of someone not looking where their head is oriented. Eye tracking can also help to reduce computational load when rendering frames, since we could render in high resolution only where the eyes are looking.

The sense of virtual immersion is usually achieved via some means of position and orientation tracking. The most common means of tracking include optical, ultrasonic, electromagnetic, and mechanical. All of these means have been used on various head-mounted display (HMD) devices. HMDs come in three basic varieties: stereoscopic, monocular, and head-coupled. The earliest stereoscopic HMD was Ivan Sutherland's Sword of Damocles, which was built in 1968 while he was at Harvard. It got its name from the large mechanical position-sensing arm which hung from the ceiling and made the device ungainly to wear. NASA has built several HMDs, chiefly using LCD displays which had poor resolution. The University of North Carolina has also built several HMDs using such items as LCD screens, magnifying optics and bicycle helmets. VPL Research's EyePhone series were the first commercial HMDs. A good example of a monocular HMD is the Private Eye by Reflection Technologies of Waltham, MA. This unit is just 1.2 x 1.3 x 3.5 inches and is suspended by a lightweight headband in front of one eye. The wearer sees a 12-inch monitor floating in mid-air about 2 feet in front of them. The BOOM is a head-coupled HMD and was developed at NASA's Ames Research Center. The BOOM uses two 2.5-inch CRTs mounted within a small black box that has two hand grips on each side and is attached to the end of articulated counter-balanced arms which also serve for position sensing.

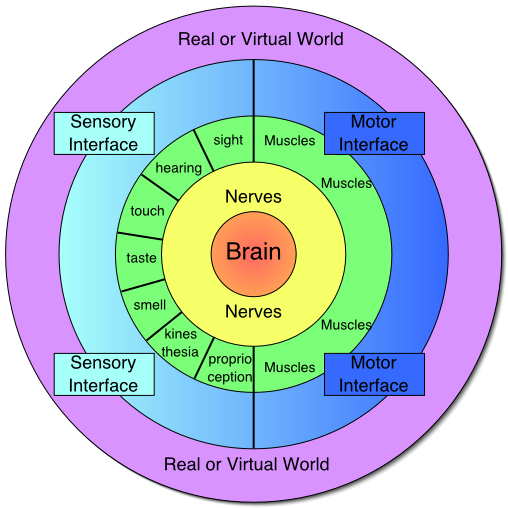

Anthropocentric User-Diagram

Virtual Reality : The Sight : Visual Interfacing

Immersion / Input

Interactivity / Output

The Human Eye

NB. : The eye : an analog-digital converter ...

When light reaches the cone and rod cells, it creates an electric potential. Above a given threshold (the sensitivity of the cell), an action potential is transmitted from one nerve cell to the next. The height and duration of the action potential are constant; only the frequency (the number of potentials per unit of time) varies with the intensity of the stimulus (here, the light).

At that level, we can already notice that two things may inform a person of the distance of an object: the contraction of the ciliary muscles (focus), and the orbital muscles that set the orientation of the eyes (stereoscopic view).

The eye is essentially an opaque eyeball filled with a water-like fluid. In the front of the eyeball is a transparent opening known as the cornea. The cornea is a thin membrane which has an index of refraction of approximately 1.38. The cornea has the dual purpose of protecting the eye and refracting light as it enters the eye. After light passes through the cornea, a portion of it passes through an opening known as the pupil. Rather than being an actual part of the eye's anatomy, the pupil is merely an opening. The pupil is the black portion in the middle of the eyeball. Its black appearance is attributed to the fact that the light which the pupil allows to enter the eye is absorbed on the retina (and elsewhere) and does not exit the eye. Thus, as you look at another person's pupil opening, no light is exiting their pupil and coming to your eye; consequently, the pupil appears black.
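The refraction at the cornea mentioned above can be illustrated with Snell's law. The following is only a minimal sketch: it treats the cornea as a flat interface between air (index 1.0, an assumption) and a medium of index 1.38 (the value quoted in the text), and the function name `refraction_angle_deg` is hypothetical.

```python
import math

def refraction_angle_deg(incidence_deg, n1=1.0, n2=1.38):
    """Snell's law: n1 * sin(incidence) = n2 * sin(refraction).

    n1 = 1.0 (air) is an assumption; n2 = 1.38 is the corneal index
    quoted in the text. A flat interface is assumed for simplicity.
    """
    sin_r = n1 * math.sin(math.radians(incidence_deg)) / n2
    return math.degrees(math.asin(sin_r))

# Rays entering the denser medium are bent towards the normal.
for angle in (10, 30, 60):
    print(angle, "->", round(refraction_angle_deg(angle), 2))
```

The real cornea is curved, which is what gives it focusing power; the flat-interface model only shows how strongly a single ray is bent.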

Like the aperture of a camera, the size of the pupil opening can be adjusted by the iris. The iris is the colored part of the eye, being blue for some people and brown for others (and so forth); it is a diaphragm which is capable of stretching and reducing the size of the opening. In bright-light situations, the iris adjusts to reduce the size of the pupil and limit the amount of light which enters the eye; in dim-light situations, the iris adjusts so as to maximize the size of the pupil and increase the amount of light which enters the eye.

Light which passes through the pupil opening will enter the crystalline lens. The crystalline lens is made of a fibrous, jelly-like material which has an index of refraction of 1.44. Unlike the lens on a camera, the lens of the eye is able to change its shape and thus serves to fine-tune the vision process. The lens is attached to the ciliary muscles. These muscles relax and contract in order to change the shape of the lens. By carefully adjusting the lens's shape, the ciliary muscles assist the eye in the critical task of producing an image on the back of the eyeball.

The inner surface of the eye is known as the retina. The retina contains the rods and cones which serve the task of detecting the intensity and the frequency of the incoming light. An adult eye is typically equipped with 120 million rods which detect the intensity of light and 6 million cones which detect the frequency of light. These rods and cones send nerve impulses to the brain. The nerve impulses travel through a network of nerve cells; there are as many as one-million neural pathways from the rods and cones to the brain. This network of nerve cells is bundled together to form the optic nerve on the very back of the eyeball.

Each part of the eye plays a distinct part in enabling humans to see. The ultimate goal of such an anatomy is to allow humans to focus images on the retina, at the back of the eye.

Extract from http://www.physicsclassroom.com/Class/refrn/U14L6a.html

The Human Eye : Main Characteristics of the Sight

Human sight is a complex sense

Its characteristics vary depending on the surrounding light, the input from the other connected senses...

The Purview (Field of View), when eyes are motionless :

- HOR : 90° (temple) + 45° (nose) = 135° (with only a 2° high-resolution central cone)

- VER : 45° (up) + 70° (down) = 115°

- Binocular vision : 100°-120°

An eye can move 15° in all directions at 600°/s

The Purview, when head is motionless :

- HOR : 170° for one eye (NB. 120° for very good HMD), 210° for both (~ 200°)

- VER : 145°

The Purview, when head moving (for one eye) :

- HOR : 200° (temple) + 130° (nose) = 330°

- VER : 140° (up) + 170° (down) = 310°

A head can move at 800°/s

Visual Acuity : between 30″ and 2′ of arc

The Human Eye : Main Characteristics of the Sight (2)

Total 15M pixels over a FOV of 4π/3 steradians (about 1/3 of a full sphere, which is 4π steradians), most dense in the fovea.

Visual Acuity : between 30″ and 2′ of arc ... let's remember 1′

Pixel resolution

1′ : 0.1 mm at about 35 cm (0.0001 / tan(1°/60) ≈ 0.34 m)

A 25 cm wide screen viewed at 35 cm, i.e. with a 40° FOV, should have about 2500 pixels across (and not only 1024) !
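The pixel-count estimate above can be checked with a few lines of arithmetic. This is a sketch under the slide's own assumptions (an acuity of 1 arc minute, a flat screen seen head-on); the function names are hypothetical.

```python
import math

ARCMIN_RAD = math.radians(1 / 60)  # 1 arc minute, the acuity retained in the text

def smallest_detail_m(distance_m, acuity_rad=ARCMIN_RAD):
    """Size of the smallest detail resolved at a given viewing distance."""
    return distance_m * math.tan(acuity_rad)

def pixels_needed(screen_width_m, distance_m, acuity_rad=ARCMIN_RAD):
    """Pixels across the screen so that one pixel matches the acuity limit."""
    return screen_width_m / smallest_detail_m(distance_m, acuity_rad)

def horizontal_fov_deg(screen_width_m, distance_m):
    """Field of view covered by a flat screen centred in front of the eye."""
    return math.degrees(2 * math.atan(screen_width_m / (2 * distance_m)))

print(smallest_detail_m(0.35))          # about 0.0001 m, i.e. 0.1 mm
print(horizontal_fov_deg(0.25, 0.35))   # a little under 40 degrees
print(pixels_needed(0.25, 0.35))        # on the order of 2500 pixels
```

With these inputs the count comes out slightly below 2500, because 0.1 mm at 35 cm is a rounded figure.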

Stereoscopic Acuity : around 0.1 milliradian : $\Delta r = 0.001 \cdot r^2$ ($r$ and $\Delta r$ in m)

- 1 mm at a distance of 1 m

- 0.1 m at a distance of 10 m
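The rule of thumb above can be tabulated directly. The sketch below implements the slide's formula (Δr = 0.001 · r²) and, for comparison, a small-angle geometric estimate Δr ≈ δ · r² / IPD. That second formula and its default values (δ = 0.1 mrad, IPD = 65 mm) are stated here as assumptions and are not part of the original slide.

```python
def depth_resolution_m(distance_m, k=0.001):
    """Slide's rule of thumb: smallest perceptible depth difference, in metres."""
    return k * distance_m ** 2

def depth_resolution_geometric_m(distance_m, acuity_rad=1e-4, ipd_m=0.065):
    """Small-angle estimate (assumption): disparity ~ ipd * delta_r / r**2."""
    return acuity_rad * distance_m ** 2 / ipd_m

for r in (0.5, 1.0, 5.0, 10.0):
    print(r, depth_resolution_m(r), depth_resolution_geometric_m(r))
```

Both versions grow with the square of the distance, which is why stereoscopic depth judgement degrades so quickly beyond a few metres.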

Maximum perceptible frequency : 25-30 Hz

It is easier to perceive a slight difference than an absolute value

- Absolute colour vs. comparison of two colours

- Perception of a distance vs. comparison of the depth of two close objects (within the FOV)

What we "look at" is not about a simple picture printed on the retina

Before the 1970s: a philosophical (Kantian) duality/separation between perception and interpretation (eye/cortex). Now: a more complex organisation, with specialised areas (frequency perception, color contrast, color and low-resolution intensities, movement and stereoscopic depth perception ...)

Average IPD (InterPupillary Distance) : 65 mm for an adult

(between 50 and 70 mm for the European population)

The IPD is the center-to-center distance between a person's eyes. Binoculars, and of course HMDs, should be adjustable to any setting between those two limits.

What about Depth Perception ?

What about one-eyed people ?

Depth Perception in monocular sight (1)

- Light (Shading) and Shadows

Light (Shading) and Shadows

Shading gives information about the shape of an object. Shadows are a form of shading that indicates the positional relationship between two objects.

Depth Perception in monocular sight (2)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

Relative dimensions (and their cognition)


We compare the size of an object with that of other objects of the same type to determine the relative distance between objects (i.e. the larger is presumed closer). We also compare the size of an object with our memory of similar objects to approximate how far away the object is from us.
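The relative-size cue can be made concrete with the angle an object subtends at the eye. The sketch below is illustrative only: the function names and the 1.8 m example height are assumptions, and the inverse function mimics the "memory of similar objects" step by assuming the true size is known.

```python
import math

def angular_size_deg(object_size_m, distance_m):
    """Visual angle subtended by an object seen face-on."""
    return math.degrees(2 * math.atan(object_size_m / (2 * distance_m)))

def distance_from_angular_size_m(known_size_m, angle_deg):
    """Distance estimate when the real size is known from memory."""
    return known_size_m / (2 * math.tan(math.radians(angle_deg) / 2))

# Two people of the same (assumed) height: the one subtending the larger angle is closer.
near = angular_size_deg(1.8, 5.0)
far = angular_size_deg(1.8, 10.0)
print(near, far)
print(distance_from_angular_size_m(1.8, far))  # recovers the 10 m distance
```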

Depth Perception in monocular sight (3)

-

Light (Shading) and Shadows

-

Relative dimensions (and their cognition)

-

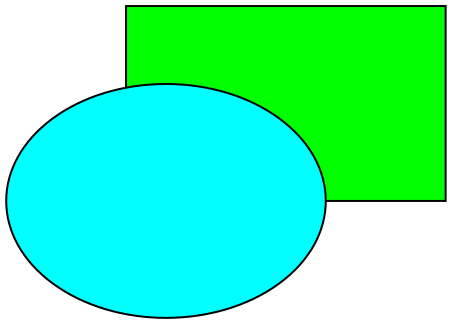

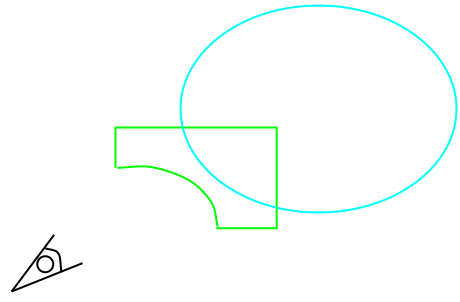

Occlusions / Interposition

Occlusions / Interposition

Interposition is the cue we receive when one object occludes our view of another. If one object masks another, then it is probably closer.

Depth Perception in monocular sight (4)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions

- Texture Gradient of a Surface

Texture Gradient of a Surface

Texture gradient is apparent because our retinas cannot discern as much detail of a texture at a distance as compared with up close.

When standing in a grassy field, the fine details of the grass's texture at your feet change to a blur of green in the distance.

Depth Perception in monocular sight (5)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation (Fog effect in external scenes)

Atmospheric effects : Visibility Variation

(Fog effect in external scenes)
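In computer graphics this atmospheric cue is commonly imitated by blending each object's colour towards a fog colour as distance grows. The sketch below uses an exponential fall-off, which is one common choice and not something specified in these notes; the density value and function names are assumptions.

```python
import math

def fog_factor(distance_m, density=0.02):
    """Fraction of the object's own colour that survives at this distance."""
    return math.exp(-density * distance_m)

def apply_fog(object_rgb, fog_rgb, distance_m, density=0.02):
    """Blend the object colour towards the fog colour with distance."""
    f = fog_factor(distance_m, density)
    return tuple(f * o + (1.0 - f) * g for o, g in zip(object_rgb, fog_rgb))

red = (1.0, 0.0, 0.0)
grey = (0.5, 0.5, 0.5)
for d in (0, 20, 100, 500):
    print(d, apply_fog(red, grey, d))
```

Distant objects lose contrast and saturation, which the viewer reads as depth.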

Depth Perception in monocular sight (6)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

Linear Perspective

Linear perspective is the observation that parallel lines converge at a single vanishing point. The use of this cue relies on the assumption that the object being viewed is constructed of parallel lines, as most buildings and roads are, for instance.
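The convergence of parallel lines follows directly from a pinhole projection. The sketch below assumes a simple camera model (eye at the origin, looking along +z, image plane at distance `focal`) and hypothetical rail coordinates, all chosen only for illustration.

```python
def project(point, focal=1.0):
    """Pinhole projection of a 3D point (x, y, z) with z > 0 onto the image plane."""
    x, y, z = point
    return (focal * x / z, focal * y / z)

# Two parallel rails, 1.5 m apart, 1.5 m below the eye, receding in depth.
for z in (2.0, 10.0, 100.0, 1000.0):
    left = project((-0.75, -1.5, z))
    right = project((0.75, -1.5, z))
    print(z, left, right)
```

As z grows, both projected rails approach the image point (0, 0), which is the vanishing point for lines parallel to the viewing direction.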

Depth Perception in monocular sight (7)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

- Height in Visual Field

This cue derives from the fact that the horizon is higher in the visual field than the ground near our feet. Therefore, the further an object is from us, the higher it will appear in our view.

Depth Perception in monocular sight (8)

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

- Height in Visual Field

- Brightness

Brightness provides a moderate depth cue. Barring other information, brighter objects are perceived as being closer.

Depth Perception in monocular sight

Monoscopic Image Depth Cues

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

- Height in Visual Field

- Brightness

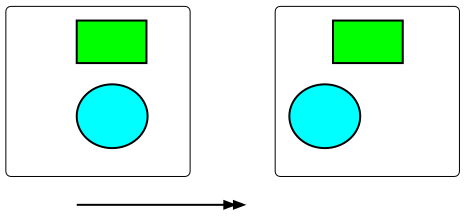

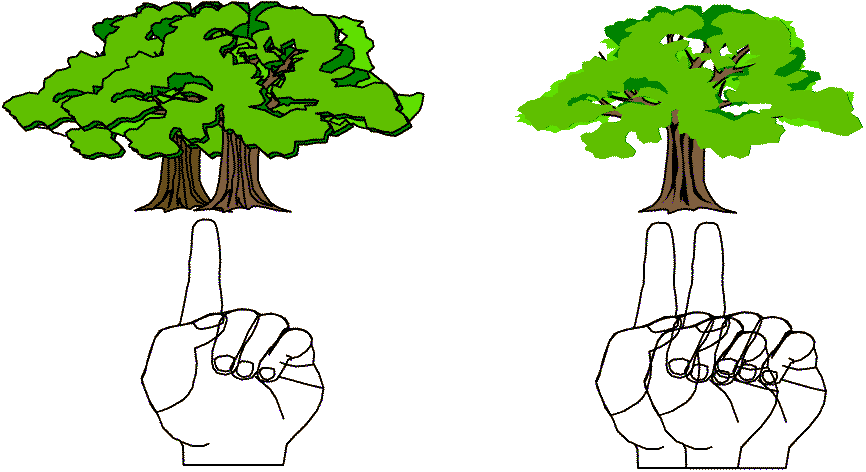

Motion depth cues : Parallax

Motion depth cues : Parallax : Relative move

Motion depth cues come from the parallax created by the changing relative position between the head and the object being observed (one or both may be in motion). Depth information is discerned from the fact that objects that are nearer to the eye will be perceived to move more quickly across the retina than more distant objects.

Movement of the viewer's body is more informative than movement of the object, because proprioceptive feedback tells viewers how far they have moved. When viewers cannot determine the rate of the relative movement between themselves and the object, their judgment is less precise.

Even a slight rotation of the head allows you to use that sort of cue.
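How much faster near objects sweep across the retina can be estimated with a one-line formula. The sketch below assumes the simplest case: the viewer translates sideways at a constant speed and the object is directly abeam (perpendicular to the direction of motion), so the angular speed is v / d. The function name and sample values are hypothetical.

```python
import math

def angular_speed_deg_s(lateral_speed_m_s, distance_m):
    """Apparent angular speed of a static object directly abeam of a moving viewer."""
    return math.degrees(lateral_speed_m_s / distance_m)

# A head moving sideways at 0.1 m/s (assumed value).
for d in (0.5, 2.0, 20.0):
    print(d, angular_speed_deg_s(0.1, d))
```

The angular speed is inversely proportional to distance, so an object ten times further away appears to move ten times more slowly.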

Depth Perception

Monoscopic Image Depth Cues

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

- Height in Visual Field

- Brightness

Motion depth cues : Parallax

Stereoscopic image depth cue (Binocular Vision)

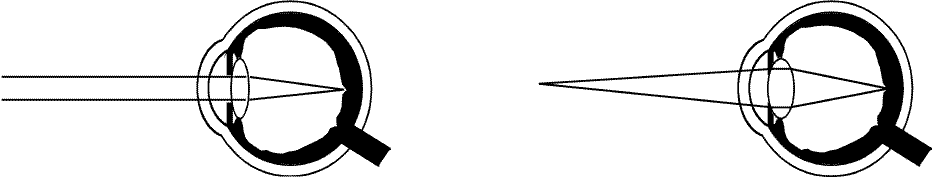

Physiological depth cue

- Accommodation (ciliary muscles, focus)

- Convergence (orbital muscle) (Binocular Vision)

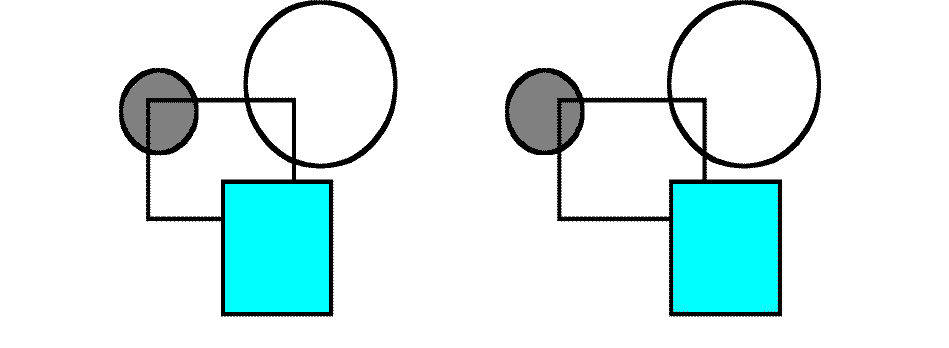

Accommodation

Accommodation to infinity

Accommodation to a short distance

Accommodation is the focusing adjustment made by the eye by changing the shape of its lens (contraction of the ciliary muscles => variation of the optical power / focal length of the crystalline lens). The amount of muscular change provides distance information for objects within 2 or 3 m.

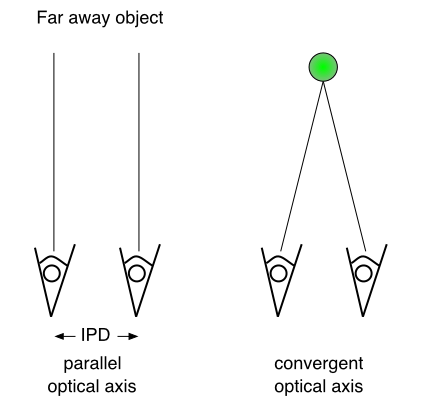


Convergence

Convergence is the movement of the eyes that brings an object onto the same location on the retina of each eye. The orbital muscle movement used for convergence provides information to the brain on the distance of the object in view.
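The geometry behind this cue is simple to sketch. The example below assumes an object straight ahead of the viewer and an interpupillary distance of 65 mm (the average quoted earlier in these notes); the function name is hypothetical.

```python
import math

def convergence_angle_deg(distance_m, ipd_m=0.065):
    """Angle between the two lines of sight when both eyes fixate the same point."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))

for d in (0.25, 0.5, 1.0, 6.0, 50.0):
    print(d, round(convergence_angle_deg(d), 3))
```

The angle changes a lot at arm's length and hardly at all beyond a few metres, which is consistent with convergence being useful only for fairly close objects.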

Accommodation Vs. Convergence

The brain has learnt that a relationship exists between accommodation and convergence

That relationship is broken in stereoscopic VR systems

This is one of the causes of VR sickness

To build a correct system, we would need to know where the user is looking

(such a system would implement a dynamic, variable focal length lens !)
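The size of this mismatch is often expressed in diopters (the inverse of a distance in metres). The sketch below assumes a display whose optics place the image at a fixed focal distance; the 2 m default is a made-up illustrative value, not a figure from these notes, and the function name is hypothetical.

```python
def vergence_accommodation_mismatch_d(virtual_distance_m, focal_distance_m=2.0):
    """Difference, in diopters, between where the eyes converge and where they focus.

    virtual_distance_m: distance of the virtual object (drives convergence).
    focal_distance_m: fixed optical distance of the display (drives accommodation);
    the 2.0 m default is an assumption for illustration only.
    """
    return abs(1.0 / virtual_distance_m - 1.0 / focal_distance_m)

for d in (0.3, 0.5, 1.0, 2.0, 10.0):
    print(d, round(vergence_accommodation_mismatch_d(d), 2))
```

The mismatch vanishes only when the virtual object sits at the display's focal distance, and it grows quickly for objects brought close to the face.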

Convergence and retinal disparity

Your nose is not only in the middle of your face; it is also, always, in the middle of your view

One of the 2 eyes is dominant

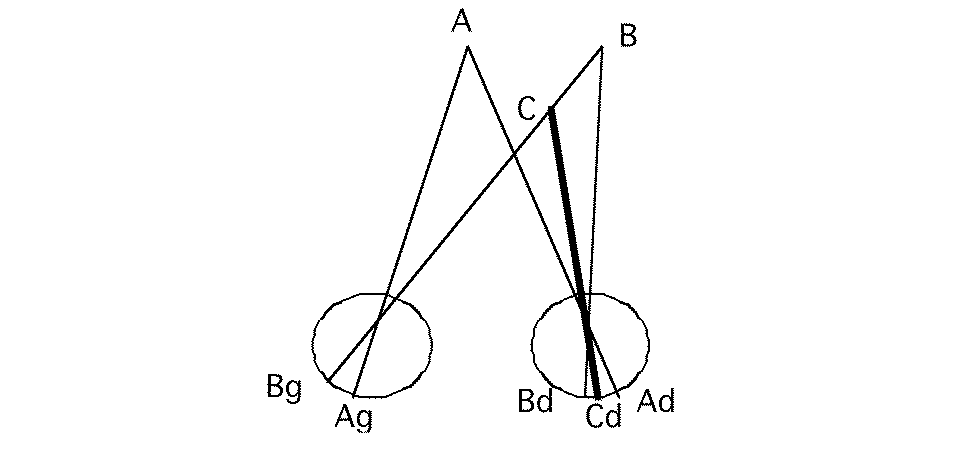

Retinal disparity : Stereopsis

Stereoscopic Image Depth Cue

Stereopsis is derived from the parallax between the different images received by the retina in each eye (binocular disparity). The stereoscopic image depth cue depends on parallax, which is the apparent displacement of objects viewed from different locations. Stereopsis is particularly effective for objects within 5 m. It is especially useful when manipulating objects within arm's reach.

Thanks to VR, it is now possible to manipulate very distant objects. But if we want that manipulation to be easy, we need to virtually bring objects back within the stereoscopic area of our view !

Depth Perception : relative importance of cues

Not all depth cues have the same priority. Stereopsis is a very strong depth cue. When in conflict with other depth cues, stereopsis is typically dominant.

Relative motion is perhaps the one depth cue that can be as strong or stronger than stereopsis.

Of the static monoscopic image depth cues, interposition is the strongest.

The physiological depth cues are perhaps the weakest. Accordingly, if one were to eliminate stereopsis by covering one eye, attempting to rely only on accommodation would be rather difficult.

Some depth cues are ineffective beyond a certain range. The range of stereopsis extends to about 5 m, and that of accommodation to about 3 m. So for more distant objects, these cues have very low priority.
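The range limits above can be encoded as a small lookup, for instance to decide which range-limited cues are worth simulating for an object at a given distance. This is only a sketch: the two ranges are the ones quoted in the text, while the table and function names are hypothetical.

```python
# Effective ranges quoted in the text, in metres.
CUE_RANGE_M = {
    "stereopsis": 5.0,
    "accommodation": 3.0,
}

def range_limited_cues_available(distance_m):
    """Range-limited depth cues that still carry information at this distance."""
    return [cue for cue, limit in CUE_RANGE_M.items() if distance_m <= limit]

for d in (1.0, 4.0, 20.0):
    print(d, range_limited_cues_available(d))
```

Beyond both limits the list is empty, and depth must come from the monoscopic and motion cues.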

Monoscopic Image Depth Cues

- Light (Shading) and Shadows

- Relative dimensions (and their cognition)

- Occlusions / Interposition

- Texture Gradient of a Surface

- Atmospheric effects : Visibility Variation

- Linear Perspective

- Height in Visual Field

- Brightness

Motion depth cues : Parallax

Stereoscopic image depth cue (Binocular Vision)

Physiological depth cue

- Accommodation (ciliary muscles, focus)

- Convergence (orbital muscle) (Binocular Vision)

Cheap 3D

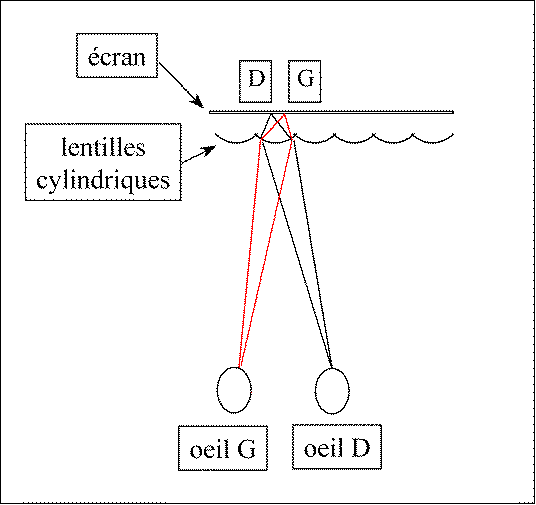

Autostereoscopic screen

Stereoscopy : different options

HMD

Red/Blue

Shutter glasses

Polarized Glasses

Linear

Circular

Wavelength RGB

Ideal Visual Interface

A good Spatial Resolution (see acuity)

A large Field of View

A stereoscopic System

A large Field of Regard (FOR) : immersion of the regard

HMD : 100%

Ideal Visual Interface (2)

Other parameters to consider

Color (3, 2, 1 ...)

Contrast

Brightness

Focal Distance

Masking (by your body) Vs Body Immersion

Head Position Information

Temporal resolution / Graphics latency tolerance

Ideal Visual Interface (3)

Other things to consider

User Mobility

Interface with tracking method

Environment requirements

Associability with other sense displays

Portability

Throughput

Encumbrance

Safety, hygiene

Cost

Taxonomy

Stationary Displays

Fishtank VR

Projection VR

Head Based Display

Occlusive HMDs

Nonocclusive HMDs

Hand Based Displays

PalmVR

See the CG part of Display devices